Here are the facts as I understand them:

And all of this, at the moment, is "allegedly".

1) CA put a survey onto Facebook which asked lots of questions to find out what sort of X you were (you know - "You're Phoebe Buffay! You're in Gryffindor!").

2) Because the app was built to work on Facebook it also collected your name, and some basic details about you - the sort of stuff you see if you look at a profile of a person you're not "friends" with. It would give them a name, age, things you 'like', and a rough idea of where you live. It may or may not have given them quite an accurate idea of where you lived, no-one can decide about that. It also told them who your friends were - again, the sort of thing you can see when you hit up someone's page that you don't know.

3) They did some wicked-smart stuff with the masses of data they collected, and built a psych profile on folks to find out how they might vote in the upcoming USA election. Then they looked for patterns of people connected to friends that didn't do the survey, and took a guess at how those friends would vote, based on the answers of the connected people, and where they lived.

4) Team Trump used this data to target adverts at the people they thought were most likely to be swayed to vote Republican, in a way that was most likely to sway them. It was super-granular, street-level stuff. People canvassed in those areas, saying things they'd been told to say which would be most likely to sway that particular area's vote. They also did the neighbours, because people living in close proximity are more likely to think in the same way.

5) Trump won, and everyone immediately started asking "how?".
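Point 3's friend-based guess can be sketched as a toy majority vote. For someone who didn't take the survey, take the most common label among their friends who did. This is a hypothetical illustration with made-up names and labels, and it assumes a plain majority vote. Nothing in the thread says this is what CA actually did, and it ignores the location side of point 3.

```python
from collections import Counter


def infer_from_friends(friends, surveyed):
    """Toy guess: label each non-respondent by majority vote of surveyed friends.

    friends:  dict mapping each person to the set of their friends (hypothetical data)
    surveyed: dict mapping each survey-taker to a label derived from their answers
    Returns {person: guessed_label} for people who did not take the survey
    but have at least one friend who did.
    """
    guesses = {}
    for person, their_friends in friends.items():
        if person in surveyed:
            continue  # we already have this person's own answers
        votes = Counter(surveyed[f] for f in their_friends if f in surveyed)
        if votes:
            # Most common label among surveyed friends; ties are broken arbitrarily
            guesses[person] = votes.most_common(1)[0][0]
    return guesses


# Hypothetical example: ann skipped the survey, but three of her friends took it.
friends = {
    "ann": {"bob", "cat", "fay"},
    "bob": {"ann"},
    "cat": {"ann"},
    "fay": {"ann"},
    "eve": {"gus"},  # neither eve nor gus took the survey
    "gus": {"eve"},
}
surveyed = {"bob": "lean_R", "cat": "lean_R", "fay": "lean_D"}

guesses = infer_from_friends(friends, surveyed)
print(guesses)  # {'ann': 'lean_R'}
```

People with no surveyed friends (eve and gus here) get no guess at all. That is why reach depends so heavily on how many friends each survey-taker has.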

Now, first off, I think this is genius. Genius. This stuff is fucking hard, and if it was as effective as people are saying it was then it was a fucking work of art.

Second off - everyone does this. I used to work in Market Research, and at least one person there is doing this sort of stuff full time, and that was just a little research shop. The behemoths like Nielsen and Kantar are going to have massive swathes of people doing the same thing (and they'll tie it to a load of other information sources, like websites you visit or things you buy). Fun fact - market research is exempt from the part of the Data Protection Act that says you can't keep data that's not timely or relevant, so over the years they're going to have accumulated buckets of data that they never have to remove.

Third off - I don't think it can have worked as well as people are saying. As I said before, this stuff is hard, not only collecting the psych profiles but using NLP to sway people. I think it may have worked to an extent, but not to a large extent.

Bottom line - I can't see how anyone did anything illegal. Immoral perhaps (on the part of CA) because they didn't tell people answering the questions what the data was really for. But it's incredibly rare that a market research survey will tell you what it's actually for - for a start, it will skew the results. Sometimes they tell you at the end, but not often.

Now, everyone is losing their tits over this, but all I can see is business as normal. CA did an excellent job, and in their desperate hunt to believe anything except for the fact that people actually wanted Trump to be president, America is jumping up and down and pointing fingers.

So... Am I wrong? Tell me!

Interesting side note - almost every US report you see or hear about this describes CA as being 'a British company', which is correct, but it was the New York office that actually did the work.

Unsurprisingly, I disagree.

Based on what?

I say it's an incredibly dubious practice. Legal yes, moral no. Plus Facebook have to take a lot of flak here for letting it happen.

You missed the bit about them offering to send Eastern European girls round to politicians so they can get footage of them being naughty for blackmail purposes (edit: which I think is probably very illegal).

Also yeah I don't blame them, I blame FB for not just allowing but encouraging this sort of thing.

LewieP wrote:

You missed the bit about them offering to send Eastern European girls round to politicians so they can get footage of them being naughty for blackmail purposes (edit: which I think is probably very illegal).

That's nothing to do with what everyone is currently kicking off about, though.

Lonewolves wrote:

I say it's an incredibly dubious practice. Legal yes, moral no. Plus Facebook have to take a lot of flak here for letting it happen.

Letting a survey happen?

I thought the issue was twofold:

1) The people who did the survey agreed to let their info be used for "marketing purposes" (or whatever the small print - that they didn't read - said), however what that survey did was take their info, and all of the info of the people they were connected to, too ("as well" is more grammatically correct, but when else do you get a chance to say tutu, got to take them when you see them.) The exact details of what they scraped aren't clear, but my assumption is that it is more than can be scraped from the public profile, otherwise what's the big deal?

2) CA bods get filmed saying they can blackmail, bribe, entrap, put up fake news etc... to rig elections. Understandably people go "hang on a second".

Grim... wrote:

Lonewolves wrote:

I say it's an incredibly dubious practice. Legal yes, moral no. Plus Facebook have to take a lot of flak here for letting it happen.

Letting a survey happen?

Having such lax security so that all sorts of personal data is collected by the survey. Come on, Grim…. I can't tell if you're playing devil's advocate here or not. If you are, please stop.

Lonewolves wrote:

Grim... wrote:

Lonewolves wrote:

I say it's an incredibly dubious practice. Legal yes, moral no. Plus Facebook have to take a lot of flak here for letting it happen.

Letting a survey happen?

Having such lax security so that all sorts of personal data is collected by the survey. Come on, Grim…. I can't tell if you're playing devil's advocate here or not. If you are, please stop.

One person's lax security is another person's 'your data is our business model'.

Grim... wrote:

LewieP wrote:

You missed the bit about them offering to send Eastern European girls round to politicians so they can get footage of them being naughty for blackmail purposes (edit: which I think is probably very illegal).

That's nothing to do with what everyone is currently kicking off about, though.

Well, it was part of the same investigation, but yes.

I thought they had got more of the info on friends than just a list of names or whatnot, and FB had allowed them to harvest further details that they shouldn’t have been allowed to take.

I think a lot of it is not just the privacy aspect but the specifics of what was then done (by all sorts of people) in terms of the targeted messages. Like, they weren’t of the ‘Trump will lower taxes and build a wall’ common GOP approach but were more of the ‘Proof that Killary eats babies and Obama is a Muslim’ type clickbait encouraging people to vote based on mad lies.

Cras wrote:

Lonewolves wrote:

Grim... wrote:

Lonewolves wrote:

I say it's an incredibly dubious practice. Legal yes, moral no. Plus Facebook have to take a lot of flak here for letting it happen.

Letting a survey happen?

Having such lax security so that all sorts of personal data is collected by the survey. Come on, Grim…. I can't tell if you're playing devil's advocate here or not. If you are, please stop.

One person's lax security is another person's 'your data is our business model'.

If you’re not paying for the product, you are the product.

Grim... wrote:

1) CA put a survey onto Facebook

Not quite:

https://www.theguardian.com/news/2018/m ... s-election

Quote:

The data was collected through an app called thisisyourdigitallife, built by academic Aleksandr Kogan, separately from his work at Cambridge University. Through his company Global Science Research (GSR), in collaboration with Cambridge Analytica, hundreds of thousands of users were paid to take a personality test and agreed to have their data collected for academic use.

However, the app also collected the information of the test-takers’ Facebook friends, leading to the accumulation of a data pool tens of millions-strong. Facebook’s “platform policy” allowed only collection of friends’ data to improve user experience in the app and barred it being sold on or used for advertising.

Lonewolves wrote:

Grim... wrote:

Lonewolves wrote:

I say it's an incredibly dubious practice. Legal yes, moral no. Plus Facebook have to take a lot of flak here for letting it happen.

Letting a survey happen?

Having such lax security so that all sorts of personal data is collected by the survey. Come on, Grim…. I can't tell if you're playing devil's advocate here or not. If you are, please stop.

I don't really think you understand what happened.

These scandals are always useful for people to think a bit harder on the problems of using social media too much. But there's nothing here that's surprising, and wasn't the Obama campaign lauded for being pioneers in using big data? I don't doubt for a minute that this is just regular stuff that many campaigns do.

The Trump election was a tragedy, but this news just makes me shrug my shoulders. Sometimes I really want to close my Facebook account, but I still need to be reminded about birthdays.

Curiosity wrote:

If you’re not paying for the product, you are the product.

a) how much do you pay for beex

b) even when you're paying for the product, you are often still the product. cf any traditional media that sells adverts: newspapers, magazines, television, ...

Doctor Glyndwr wrote:

Grim... wrote:

1) CA put a survey onto Facebook

Not quite:

https://www.theguardian.com/news/2018/m ... s-election

Quote:

The data was collected through an app called thisisyourdigitallife, built by academic Aleksandr Kogan, separately from his work at Cambridge University. Through his company Global Science Research (GSR), in collaboration with Cambridge Analytica, hundreds of thousands of users were paid to take a personality test and agreed to have their data collected for academic use.

However, the app also collected the information of the test-takers’ Facebook friends, leading to the accumulation of a data pool tens of millions-strong. Facebook’s “platform policy” allowed only collection of friends’ data to improve user experience in the app and barred it being sold on or used for advertising.

Indeed, I was just about to write that but had to reply to yet another tiresome "this can't really be your opinion lul" post from the Ivory tower department.

I think the actual information collected from the friends was key. At the moment, I've seen nothing to suggest it isn't anything you could get from page-scraping.

Curiosity wrote:

Cras wrote:

Lonewolves wrote:

Grim... wrote:

Lonewolves wrote:

I say it's an incredibly dubious practice. Legal yes, moral no. Plus Facebook have to take a lot of flak here for letting it happen.

Letting a survey happen?

Having such lax security so that all sorts of personal data is collected by the survey. Come on, Grim…. I can't tell if you're playing devil's advocate here or not. If you are, please stop.

One person's lax security is another person's 'your data is our business model'.

If you’re not paying for the product, you are the product.

That's fine (it's not, but for the sake of argument let's say it is), but CA abused Facebook's ToS as DocG stated above. FB harvesting data for their own nefarious means is one thing, but allowing a third party to do it is another matter entirely.

Doctor Glyndwr wrote:

Curiosity wrote:

If you’re not paying for the product, you are the product.

a) how much do you pay for beex

Like I've not mined Beex for data.

Lonewolves wrote:

CA abused Facebook's ToS as DocG stated above

Read it again. If CA is a third party how can they have been involved with Facebook's TOS?

Grim... wrote:

Doctor Glyndwr wrote:

Grim... wrote:

1) CA put a survey onto Facebook

Not quite:

https://www.theguardian.com/news/2018/m ... s-election

Quote:

The data was collected through an app called thisisyourdigitallife, built by academic Aleksandr Kogan, separately from his work at Cambridge University. Through his company Global Science Research (GSR), in collaboration with Cambridge Analytica, hundreds of thousands of users were paid to take a personality test and agreed to have their data collected for academic use.

However, the app also collected the information of the test-takers’ Facebook friends, leading to the accumulation of a data pool tens of millions-strong. Facebook’s “platform policy” allowed only collection of friends’ data to improve user experience in the app and barred it being sold on or used for advertising.

Indeed, I was just about to write that but had to reply to yet another tiresome "this can't really be your opinion lul" post from the Ivory tower department.

Sorry if I offended you. I was just genuinely surprised that it was your sincere opinion.

Doctor Glyndwr wrote:

Grim... wrote:

1) CA put a survey onto Facebook

Not quite:

https://www.theguardian.com/news/2018/m ... s-election

Quote:

The data was collected through an app called thisisyourdigitallife, built by academic Aleksandr Kogan, separately from his work at Cambridge University. Through his company Global Science Research (GSR), in collaboration with Cambridge Analytica, hundreds of thousands of users were paid to take a personality test and agreed to have their data collected for academic use.

However, the app also collected the information of the test-takers’ Facebook friends, leading to the accumulation of a data pool tens of millions-strong. Facebook’s “platform policy” allowed only collection of friends’ data to improve user experience in the app and barred it being sold on or used for advertising.

OK, there was something missing that makes everything worse. But aren't we used to tech giants basically ignoring laws? Uber has been operating against EU and national laws for years, so is it really surprising that Facebook pulls something like this?

I really hate conspiracy theories, but I make an exception when it comes to tech giants. I'm sure this is the least evil thing they're capable of.

Grim... wrote:

Doctor Glyndwr wrote:

Curiosity wrote:

If you’re not paying for the product, you are the product.

a) how much do you pay for beex

Like I've not mined Beex for data.

Now send me Latvian hookers.

Grim... wrote:

Lonewolves wrote:

CA abused Facebook's ToS as DocG stated above

Read it again. If CA is a third party how can they have been involved with Facebook's TOS?

Seems pretty clear. The platform policy allowed them to collect data, but then they sold it on against Facebook's policy.

Lonewolves wrote:

Grim... wrote:

Lonewolves wrote:

CA abused Facebook's ToS as DocG stated above

Read it again. If CA is a third party how can they have been involved with Facebook's TOS?

Seems pretty clear. The platform policy allowed them to collect data, but then they sold it on against Facebook's policy.

They didn't sell it, they bought it.

Although other places say that they built the whole thing, so

Quote:

A psychology professor named Aleksandr Kogan who was working with Cambridge Analytica asked to buy Kosinski's data. When Kosinski declined, the Times reports that Cambridge paid Kogan more than $800,000 to create his own personality app to harvest data from Facebook users.

https://arstechnica.com/tech-policy/201 ... explained/

No, they bought data from someone who was violating the T&Cs.

Edit: wot Grim... said.

Grim... wrote:

Lonewolves wrote:

Grim... wrote:

Lonewolves wrote:

CA abused Facebook's ToS as DocG stated above

Read it again. If CA is a third party how can they have been involved with Facebook's TOS?

Seems pretty clear. The platform policy allowed them to collect data, but then they sold it on against Facebook's policy.

They didn't sell it, they bought it.

Although other places say that they built the whole thing, so

Stop editing stuff.

If that's true then Kogan is to blame.

-edit- wait, Kogan was specifically paid by CA to build the app?

I edited a comma into a post that was nine years old last week.

Grim... wrote:

I think the actual information collected from the friends was key. At the moment, I've seen nothing to suggest it isn't anything you could get from page-scraping.

I can't get a definitive answer to this. It's an old API that FB closed in 2015 or so, just after Kogan's app did its thing. However, I think it's extensive -- apps could read a lot of Friends-only data that belonged to your friends. It wasn't just names. Consider this: https://www.theguardian.com/news/2018/m ... -parakilas

Quote:

The test automatically downloaded the data of friends of people who took the quiz, ostensibly for academic purposes. Cambridge Analytica has denied knowing the data was obtained improperly, and Kogan maintains he did nothing illegal and had a “close working relationship” with Facebook.

While Kogan’s app only attracted around 270,000 users (most of whom were paid to take the quiz), the company was then able to exploit the friends permission feature to quickly amass data pertaining to more than 50 million Facebook users.

“Kogan’s app was one of the very last to have access to friend permissions,” Parakilas said, adding that many other similar apps had been harvesting similar quantities of data for years for commercial purposes. Academic research from 2010, based on an analysis of 1,800 Facebooks apps, concluded that around 11% of third-party developers requested data belonging to friends of users.

...

Parakilas said he was unsure why Facebook stopped allowing developers to access friends data around mid-2014, roughly two years after he left the company. However, he said he believed one reason may have been that Facebook executives were becoming aware that some of the largest apps were acquiring enormous troves of valuable data.

He recalled conversations with executives who were nervous about the commercial value of data being passed to other companies.

“They were worried that the large app developers were building their own social graphs, meaning they could see all the connections between these people,” he said. “They were worried that they were going to build their own social networks.”

Facebook wouldn't have been nervous if the harvested data was trivial.
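Rough arithmetic on the two figures Parakilas gives (around 270,000 quiz-takers, data on more than 50 million users) shows how much the friends permission amplified the app's reach. Friend lists overlap, and 50 million is a floor, so this is only a rough lower bound on the average number of friends each quiz-taker exposed:

```latex
\frac{50\,000\,000 \text{ users' data}}{270\,000 \text{ quiz-takers}} \approx 185 \text{ friends per quiz-taker}
```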

Grim…, considering how our opinions on internet privacy, big corporations, capitalism and personal data are so diametrically opposed, we should probably just agree to disagree here to avoid a falling out.

@DoccyG True enough, but that's still just speculation. I guess we'll find out in time.

Grim... wrote:

Doctor Glyndwr wrote:

Grim... wrote:

1) CA put a survey onto Facebook

Not quite:

https://www.theguardian.com/news/2018/m ... s-election

Quote:

The data was collected through an app called thisisyourdigitallife, built by academic Aleksandr Kogan, separately from his work at Cambridge University. Through his company Global Science Research (GSR), in collaboration with Cambridge Analytica, hundreds of thousands of users were paid to take a personality test and agreed to have their data collected for academic use.

However, the app also collected the information of the test-takers’ Facebook friends, leading to the accumulation of a data pool tens of millions-strong. Facebook’s “platform policy” allowed only collection of friends’ data to improve user experience in the app and barred it being sold on or used for advertising.

Indeed, I was just about to write that but had to reply to yet another tiresome "this can't really be your opinion lul" post from the Ivory tower department.

I think the actual information collected from the friends was key. At the moment, I've seen nothing to suggest it isn't anything you could get from page-scraping.

That seems to be different from what I understand from what I’ve read in The Guardian.

Facebook will give personal data to people for certain usages (improvement of service, for example). It seems they took this data and then used it for political means (well, they used it for cash money means, but by selling their wares in the political arena).

At a minimum there’s a very strong implication that they had a lot more data than was publicly available.

Grim... wrote:

@DoccyG True enough, but that's still just speculation. I guess we'll find out in time.

https://techcrunch.com/2015/04/28/faceb ... shut-down/

Quote:

It was always kind of shady that Facebook let you volunteer your friends’ status updates, check-ins, location, interests and more to third-party apps. While this let developers build powerful, personalized products, the privacy concerns led Facebook to announce at F8 2014 that it would shut down the Friends data API in a year. Now that time has come, with the forced migration to Graph API v2.0 leading to the friends’ data API shutting down, and a few other changes happening on April 30.

https://techcrunch.com/2014/05/02/f8/

Quote:

Facebook announced plans to stop letting developers pull data from users’ friends, such as their photos, birthdays, status updates, and checkins.

Why? Because the idea that anyone could give someone else’s data to a developer without their permission was always kind of shady. This should boost a perception of privacy on the Facebook platform, but also deny developers the ability to build apps like photo album browsers, search engines, calendars, and location maps that could compete with Facebook’s own products.

Facebook's official API docs page doesn't go back far enough to show the old API

https://developers.facebook.com/docs/gr ... og/archive

Here's an archived image from 2013 showing what data of yours could be accessed by 3P apps installed by your friends:

https://nakedsecurity.sophos.com/2013/0 ... formation/

I'd say that's extensive.

Lonewolves wrote:

Grim…, considering how our opinions on internet privacy, big corporations, capitalism and personal data are so diametrically opposed, we should probably just agree to disagree here to avoid a falling out.

You are allowed to have a discussion with differing opinions on both sides without falling out, you know

Doctor Glyndwr wrote:

I'd say that's extensive.

Ah, yes indeed. And there's no reason to assume they didn't grab everything they could.

The "things they like" would be the most important one for working out stuff about them, I suspect.

Grim... wrote:

The "things they like" would be the most important one for working out stuff about them, I suspect.

The entire history of their posts and all their photos would be more useful.

Doctor Glyndwr wrote:

Grim... wrote:

The "things they like" would be the most important one for working out stuff about them, I suspect.

The entire history of their posts and all their photos would be more useful.

Depends how good the AI would have been at the time.

[edit]D'uh

Grim... wrote:

Depends how good the AI would have been at the time.

[edit]D'uh

You fucking plank.

Right?!

It's also worth reflecting on these points: https://www.theregister.co.uk/2018/03/1 ... _director/

First, Facebook's reaction when it discovered what had happened:

Quote:

Facebook knew about the incident in 2015 and sought assurances from all concerned that the data had been deleted. What has prompted Friday’s suspension of Cambridge Analytica was Wylie going public to various media outlets with some extraordinary claims about how the data was used.

...

He claims to have deleted the data before being formally asked to do so by Facebook in 2016, a year after the misuse was discovered by the social media firm. All he had to do was fill in a form saying he had deleted and Facebook were satisfied with that.

Also Facebook's PR line is that this is not a breach:

Quote:

The claim that this is a data breach is completely false. Aleksandr Kogan requested and gained access to information from users who chose to sign up to his app, and everyone involved gave their consent. People knowingly provided their information, no systems were infiltrated, and no passwords or sensitive pieces of information were stolen or hacked.

It doesn't join the dots that (to my mind) suggest that if this wasn't a breach, then everything must have been working just as it intended, which might be a worse PR place for it to be in.

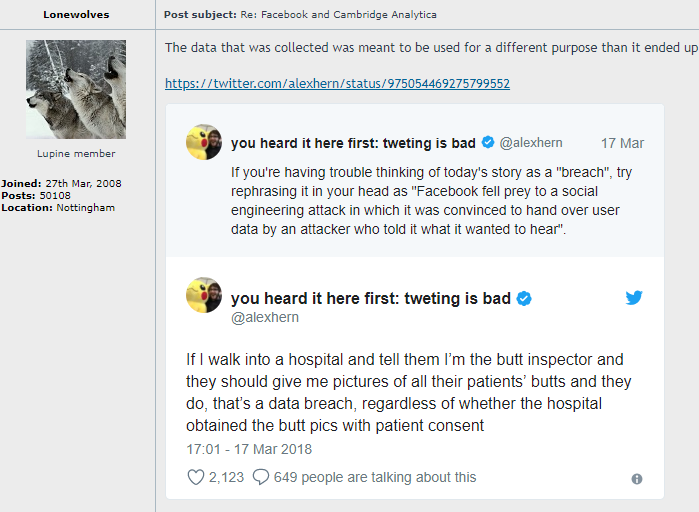

The data that was collected was meant to be used for a different purpose than it ended up being used for, which is the issue I think.

https://twitter.com/alexhern/status/975054469275799552

If I walk into a hospital and tell them I’m the butt inspector and they should give me pictures of all their patients’ butts and they do, that’s a data breach, regardless of whether the hospital obtained the butt pics with patient consent

— you heard it here first: tweting is bad (@alexhern) March 17, 2018

Why has the Twitter embed function turned to shit?

Seems okay. What's it done wrong?

If you share from twitter, you get some s=xx gunk on the URL that breaks the embed. Just delete it.
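Here's a minimal Python sketch of that fix. It assumes the only problem is the query string Twitter appends to shared links. The `?s=21` value below is just an example, and `clean_tweet_url` is a hypothetical helper, not part of the forum software.

```python
from urllib.parse import urlsplit, urlunsplit


def clean_tweet_url(url: str) -> str:
    """Strip the query string (e.g. '?s=20') and any fragment from a tweet link."""
    parts = urlsplit(url)
    # Keep scheme, host and path; drop query and fragment.
    return urlunsplit((parts.scheme, parts.netloc, parts.path, "", ""))


print(clean_tweet_url("https://twitter.com/alexhern/status/975054469275799552?s=21"))
# prints https://twitter.com/alexhern/status/975054469275799552
```

A link that has no query string comes back unchanged. So it won't help if the embed breaks for some other reason.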

Doctor Glyndwr wrote:

If you share from twitter, you get some s=xx gunk on the URL that breaks the embed. Just delete it.

Nope, it's:

Code:

https://twitter.com/alexhern/status/975054469275799552

Grim... wrote:

Seems okay. What's it done wrong?

It's just pasted the tweet rather than doing the fancy embed you implemented a while back.

Are you using a phone?

Gosh, that's confusing.

Weird. No, I'm doing it from a PC. Copied the link from twitter.com using Firefox.